Sanity testing checks the major functionality of an application to decide whether the build is ready for further testing. It is a rapid test that validates whether a particular application or piece of software produces the desired results; it is not in-depth testing. Before Sanity testing, the software must already have passed other kinds of testing. Sanity testing goes into more depth than Smoke testing.

Sanity testing usually includes a suite of core test cases covering basic GUI functionality, to demonstrate connectivity to the application server, database, printers, etc.
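Although Sanity tests are normally run by hand, the connectivity checks described above can be sketched in code. The following is a minimal illustration in Python, not a prescribed implementation: the check names and the `run_sanity_suite` helper are hypothetical, an in-memory SQLite database stands in for the real database, and the application server check is only a placeholder.

```python
import sqlite3
from typing import Callable, Dict


def check_database_connectivity() -> bool:
    """Open a connection and run a trivial query.

    Assumption: in-memory SQLite stands in for the real database.
    """
    conn = sqlite3.connect(":memory:")
    try:
        return conn.execute("SELECT 1").fetchone() == (1,)
    finally:
        conn.close()


def check_app_server_reachable() -> bool:
    """Placeholder: a real check would contact the application server."""
    return True


def run_sanity_suite(checks: Dict[str, Callable[[], bool]]) -> Dict[str, bool]:
    """Run every check; an exception counts as a failure, not a crash."""
    results = {}
    for name, check in checks.items():
        try:
            results[name] = bool(check())
        except Exception:
            results[name] = False
    return results


# Hypothetical suite of core checks.
SANITY_CHECKS = {
    "database": check_database_connectivity,
    "app_server": check_app_server_reachable,
}

if __name__ == "__main__":
    for name, passed in run_sanity_suite(SANITY_CHECKS).items():
        print(f"{name}: {'PASS' if passed else 'FAIL'}")
```

Treating an exception as a failed check keeps one broken dependency from hiding the status of the others.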

When a new build is obtained after fixing some minor issues, Sanity testing is performed on that build instead of complete regression testing. In general terms, Sanity testing is a subset of regression testing. Sanity testing is also performed after thorough regression testing is completed, to ensure that defect fixes do not break the core functionality of the product.

It is performed towards the end of the product release phase, for example before Alpha or Beta testing. Its objective is to verify whether the requirements are met. A Sanity test is normally unscripted. Sanity testing is a subset of Acceptance testing.

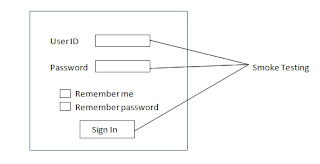

Sanity testing is a term related to Smoke testing, but the two are different. One similarity is that both are used as criteria for accepting or rejecting a new build.


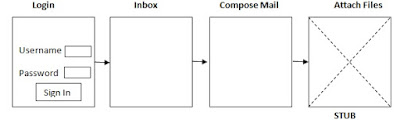

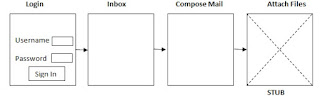

If the Sanity test cases fail, the deployed build is rejected: if the build does not contain the required changes, there is no point in running regression testing on it. Smoke testing is part of regression testing and validates crucial functionality, whereas Sanity testing is part of acceptance testing and validates whether newly added functionality works. Smoke testing is usually performed on a relatively unstable build or product, while Sanity testing is done on a relatively stable one. Generally only one of them is performed, but both can be performed if required. When both are needed, Smoke testing is performed first, followed by Sanity testing.
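The accept-or-reject decision described above can be sketched as a small gate. This is an illustrative Python sketch only: `evaluate_build`, `all_pass`, and the returned status strings are hypothetical names, and each check is assumed to be a function that returns `True` on success.

```python
from typing import Callable, Iterable


def all_pass(checks: Iterable[Callable[[], bool]]) -> bool:
    """Return True only if every check returns True (stops at the first failure)."""
    return all(check() for check in checks)


def evaluate_build(
    smoke_checks: Iterable[Callable[[], bool]],
    sanity_checks: Iterable[Callable[[], bool]],
) -> str:
    """Run Smoke checks first, then Sanity checks; reject on the first failing stage."""
    if not all_pass(smoke_checks):
        return "rejected: smoke failed"
    if not all_pass(sanity_checks):
        return "rejected: sanity failed"
    return "accepted: proceed to regression testing"


if __name__ == "__main__":
    # Smoke passes, Sanity fails, so the build is rejected before regression.
    print(evaluate_build([lambda: True], [lambda: False]))
```

Because the Smoke stage is evaluated first, a failing Smoke check means the Sanity checks are never run at all.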

Examples of Sanity testing:

- Database connectivity between the modules of the application or software

- Identification of missing objects

- Checking that errors from the previous build no longer occur

- Testing on the application servers

- Slow function issues

- Database crash issues

- System termination

Sanity testing factors:

- Environment issues

E.g. the application closing unexpectedly, the application hanging, or the URL failing to launch

- Exceptional errors

E.g. java.io.IOException (a stack trace or some source code is displayed)

- Urgent severity defects

When to perform Sanity Testing:

When a software build arrives with minor fixes in code or functionality and there is not enough time for in-depth testing, Sanity testing is performed to check whether the defects reported in the previous build are fixed and that the fixes do not affect any previously working functionality. The objective of Sanity testing is to validate that the planned functionality works as expected.
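One way to picture this narrowed scope is as a filter over an existing regression suite: keep the tests for the areas touched by the fixes, plus the tests for core, previously working functionality. The sketch below is only an illustration under that assumption; `RegressionCase`, `select_sanity_tests`, and the area names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class RegressionCase:
    name: str
    area: str            # functional area the test exercises
    core: bool = False   # True for core, previously working functionality


def select_sanity_tests(
    regression_suite: List[RegressionCase], fixed_areas: Set[str]
) -> List[RegressionCase]:
    """Keep tests for areas touched by the fixes, plus all core tests."""
    return [t for t in regression_suite if t.area in fixed_areas or t.core]


if __name__ == "__main__":
    suite = [
        RegressionCase("login_works", "auth", core=True),
        RegressionCase("export_pdf", "reports"),
        RegressionCase("invoice_total", "billing"),
    ]
    # Assume the new build contains a fix in the hypothetical "billing" area.
    for case in select_sanity_tests(suite, {"billing"}):
        print(case.name)
```

With a fix in "billing", the selection keeps `login_works` (core) and `invoice_total` (fixed area) and skips `export_pdf`.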

Advantages:

- Provides faster results

- Saves time

- Requires less preparation time because the tests are unscripted

Disadvantages:

- Defects are difficult to reproduce because the tests are unscripted

- Does not test the entire application in depth