Integration testing is the logical extension of unit testing. In its simplest form, two components or modules that have already been tested in isolation are combined, and the interface between them is tested. As testing progresses, further modules are added to the already-tested group and their interfaces are tested in turn. In the software testing life cycle, integration testing comes after unit testing and before system testing.

The intention of integration testing is to test combinations of functions, modules, or pieces of code. The components are tested in pairs.

Integration testing identifies problems that occur when components or modules are combined. Before integration testing starts, every unit or component must already have been tested separately.
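To make the difference from unit testing concrete, here is a minimal Python sketch, assuming two hypothetical modules, `parse_order` and `order_total`, that have each passed their own unit tests. The integration test feeds the output of one directly into the other, so it exercises the interface between them (the dictionary keys and value types) rather than either function alone.

```python
# Hypothetical module A: parses a raw order line such as "widget,3,2.50".
def parse_order(line: str) -> dict:
    name, qty, price = line.split(",")
    return {"item": name.strip(), "qty": int(qty), "unit_price": float(price)}


# Hypothetical module B: computes the total for an already-parsed order.
def order_total(order: dict) -> float:
    return round(order["qty"] * order["unit_price"], 2)


# Unit tests: each module checked in isolation with hand-built inputs.
def test_parse_order_unit() -> None:
    assert parse_order("widget,3,2.50") == {"item": "widget", "qty": 3, "unit_price": 2.5}


def test_order_total_unit() -> None:
    assert order_total({"item": "x", "qty": 2, "unit_price": 1.25}) == 2.5


# Integration test: A's real output is passed straight into B, so the
# interface between the two modules is exercised.
def test_parse_then_total_integration() -> None:
    assert order_total(parse_order("widget,3,2.50")) == 7.5


if __name__ == "__main__":
    test_parse_order_unit()
    test_order_total_unit()
    test_parse_then_total_integration()
    print("all tests passed")
```

If the integration test fails while both unit tests pass, the fault lies in how the two modules fit together, which is exactly the class of problem integration testing is meant to catch.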

Purpose of Integration Testing:

1. To check that each individual module functions correctly

2. To check whether the integrated functionality/behaviour is achieved

3. To check that the modules communicate with each other as specified in the Data Flow Diagram (DFD)

4. To check navigation among the several modules/screens
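The third purpose, checking that modules communicate as the DFD specifies, can be sketched with Python's standard `unittest.mock`. In this minimal example, `SalesStore` and `ReportModule` are hypothetical modules, and the assumed DFD edge is that `ReportModule` passes a single month key to `SalesStore`. Wrapping the real store with `Mock(wraps=...)` lets each call go through to the real module while also recording it, so the test can assert on the data that crossed the interface.

```python
from unittest.mock import Mock


class SalesStore:
    """Hypothetical lower-level module holding sales figures per month."""

    def __init__(self) -> None:
        self._data = {"2024-01": [100.0, 250.0], "2024-02": [75.0]}

    def fetch_sales(self, month: str) -> list:
        return self._data.get(month, [])


class ReportModule:
    """Hypothetical higher-level module that depends on a sales store."""

    def __init__(self, store) -> None:
        self.store = store

    def monthly_total(self, month: str) -> float:
        return sum(self.store.fetch_sales(month))


def check_data_flow() -> float:
    # The spy forwards every call to the real SalesStore and records it.
    spy = Mock(wraps=SalesStore())
    report = ReportModule(spy)
    total = report.monthly_total("2024-01")
    # Assumed DFD edge: ReportModule -> SalesStore carries exactly one month key.
    spy.fetch_sales.assert_called_once_with("2024-01")
    return total


print(check_data_flow())  # 350.0
```

Because the real store still answers the call, this remains an integration test; the spy only adds visibility into what flowed between the modules.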

Integration testing can be done in a variety of ways. In general, there are four common integration testing strategies:

1. Top down approach: The highest-level modules are tested and integrated first

2. Bottom up approach: The lower-level modules are tested and integrated first

3. Sandwich or Hybrid approach: A combination of top-down and bottom-up integration testing

4. Big Bang approach: All modules, highest-level and lower-level, are integrated at once

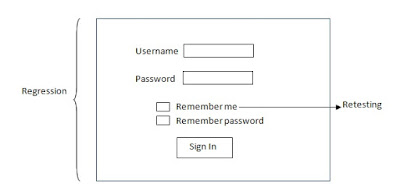

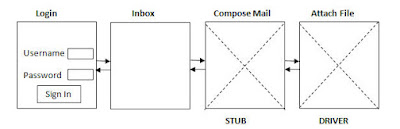

1. Top Down approach: In this approach, testing starts with the highest-level components, and lower-level components are integrated with them as they are developed. It is known as the top-down or top-to-bottom approach. Top-down testing requires the testing team to identify which modules are most and least important, and the most important modules are worked on first. A lower-level module may be missing or not yet developed, which affects the integration between the other modules. The top-down approach resembles walking a tree, where testing starts at the root and proceeds down toward the leaves.
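The ordering described above can be sketched as a breadth-first walk over a module hierarchy, so each level is integrated before the level below it. The hierarchy below (`Main`, `Billing`, `Tax`, and so on) is hypothetical, and breadth-first is only one possible top-down order; integrating depth-first, one branch at a time, is also common.

```python
from collections import deque

# Hypothetical module hierarchy: each module maps to the modules it calls.
HIERARCHY = {
    "Main": ["Billing", "Shipping"],
    "Billing": ["Tax", "Invoice"],
    "Shipping": ["Carrier"],
    "Tax": [],
    "Invoice": [],
    "Carrier": [],
}


def top_down_order(hierarchy: dict, root: str) -> list:
    """Breadth-first order: every module comes before the modules it calls."""
    order, queue = [], deque([root])
    while queue:
        module = queue.popleft()
        order.append(module)
        queue.extend(hierarchy[module])  # next level down is scheduled later
    return order


print(top_down_order(HIERARCHY, "Main"))
# ['Main', 'Billing', 'Shipping', 'Tax', 'Invoice', 'Carrier']
```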

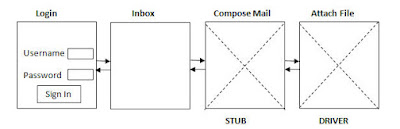

When a lower-level module is missing, the developer writes a temporary dummy program, known as a ‘STUB’, to be used in its place so that integration testing can run smoothly.
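A stub can be as simple as a function that returns a canned value. In this minimal sketch, assuming a hypothetical upper-level module `checkout_total` whose tax module has not been written yet, `tax_stub` stands in for the missing module. Passing the dependency in as a parameter is one common way to make the substitution possible; it is a design choice for the example, not a requirement of top-down testing.

```python
from typing import Callable


def tax_stub(amount: float) -> float:
    """STUB for a hypothetical tax module that does not exist yet.
    It ignores its input and returns a fixed, canned tax value."""
    return 5.0


def checkout_total(subtotal: float, tax_fn: Callable[[float], float]) -> float:
    """Hypothetical upper-level module under test; the tax module is injected
    so that the stub can replace it until the real module is ready."""
    return round(subtotal + tax_fn(subtotal), 2)


# Exercise the upper-level module with the stub standing in for the lower one.
assert checkout_total(100.0, tax_stub) == 105.0
assert checkout_total(20.0, tax_stub) == 25.0  # same canned tax for any amount
print("upper-level module works with the stub")
```

The stub returns 5.0 whatever amount it receives, so no realistic data flows from the lower level until the real tax module replaces it.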

In the top-down approach, decision making occurs at the upper levels of the hierarchy, so it is encountered first. The main advantage of the top-down approach is that it is easier to find a missing branch link.

A problem arises in this hierarchy when processing in the lower-level components is needed to adequately test the higher levels. Because stubs replace the lower-level components at the beginning of top-down testing, no significant data can flow between the modules. Lower-level modules may also not be tested as thoroughly as the upper-level modules.

Figure: Top Down Integration Testing

2. Bottom Up approach: The Bottom Up approach is the opposite of top down: lower-level components are tested first, and testing proceeds upward to the higher-level components. When the higher-level components are developed, they are integrated with the already-tested lower-level components. It is known as the bottom-up or lowest-to-highest approach. Higher-level components may be missing, which affects the integration process. The main advantage of the Bottom Up approach is that defects are more easily found.
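Assuming a small hypothetical module hierarchy, a bottom-up order can be sketched as a post-order traversal: every module appears only after all the modules it calls, so each one is integrated on top of components that have already been tested. The module names are illustrative assumptions, and the sketch assumes the call graph has no cycles.

```python
# Hypothetical module hierarchy: each module maps to the modules it calls.
HIERARCHY = {
    "Main": ["Billing", "Shipping"],
    "Billing": ["Tax", "Invoice"],
    "Shipping": ["Carrier"],
    "Tax": [],
    "Invoice": [],
    "Carrier": [],
}


def bottom_up_order(hierarchy: dict, root: str) -> list:
    """Post-order walk: a module is listed only after every module it calls.
    Assumes the call graph is acyclic."""
    order, seen = [], set()

    def visit(module: str) -> None:
        if module in seen:
            return  # a shared submodule is scheduled only once
        seen.add(module)
        for child in hierarchy[module]:
            visit(child)
        order.append(module)

    visit(root)
    return order


print(bottom_up_order(HIERARCHY, "Main"))
# ['Tax', 'Invoice', 'Billing', 'Carrier', 'Shipping', 'Main']
```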

When a higher-level module is missing, the developer writes a temporary dummy program, known as a ‘DRIVER’, to be used in its place so that integration can proceed.
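A driver is the counterpart of a stub: temporary code that calls a real lower-level module in place of its missing caller. In this minimal sketch, `compute_tax` is a hypothetical lower-level module that is finished, and `tax_driver` plays the role of the unfinished upper-level module by feeding it test inputs and collecting any mismatches. The 20% rate and the test cases are assumptions for illustration.

```python
def compute_tax(amount: float, rate: float = 0.2) -> float:
    """Hypothetical lower-level module that is already developed."""
    return round(amount * rate, 2)


def tax_driver() -> list:
    """DRIVER: temporary code standing in for the missing upper-level caller.
    It feeds test inputs to the lower-level module and returns any failures."""
    cases = [(100.0, 20.0), (0.0, 0.0), (19.99, 4.0)]  # (amount, expected tax)
    failures = []
    for amount, expected in cases:
        actual = compute_tax(amount)
        if actual != expected:
            failures.append((amount, expected, actual))
    return failures


print(tax_driver())  # []
```

Once the real upper-level module exists, it replaces the driver and calls `compute_tax` directly.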

Figure: Bottom Up Integration Testing

3. Sandwich or Hybrid approach: This approach combines the Top Down and Bottom Up approaches. If some of the highest-level components and some of the lower-level components have not been developed yet, the developer writes temporary ‘STUBS’ and ‘DRIVERS’ so that integration testing can run smoothly.
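A minimal sketch of the sandwich idea, assuming a hypothetical three-layer system: the middle-layer module `shipping_cost` is tested with a driver (`shipping_driver`) standing in for the unfinished top layer and a stub (`shipping_rate_stub`) standing in for the unfinished carrier module below it. The flat rate and the handling fee are made-up values.

```python
from typing import Callable


def shipping_rate_stub(weight_kg: float) -> float:
    """STUB for an unfinished lower-level carrier module: flat canned rate."""
    return 10.0


def shipping_cost(weight_kg: float, rate_fn: Callable[[float], float]) -> float:
    """Hypothetical middle-layer module under test: carrier rate plus a handling fee."""
    handling_fee = 2.5
    return rate_fn(weight_kg) + handling_fee


def shipping_driver() -> bool:
    """DRIVER for the unfinished top-level module that would call shipping_cost."""
    return shipping_cost(3.0, shipping_rate_stub) == 12.5


print(shipping_driver())  # True
```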

Figure: Sandwich Integration Testing

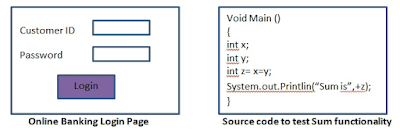

4. Big Bang approach: This approach is applicable when all the highest-level and lower-level components have been developed; all the modules are then integrated at once into a single application. The Big Bang method can save a lot of time in the integration testing process. Big Bang integration testing is also called Usage Model testing. Usage Model testing can be used in both hardware and software integration testing. The idea behind this type of integration testing is to run user-like workloads in integrated, user-like environments.

The testing team has to wait until all the modules have been developed before big bang testing can start, so there can be a long delay before testing begins. Errors found this way are expensive to fix because they are identified at a late stage, and the faulty module is difficult to pinpoint.
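For contrast, here is a minimal big bang sketch, assuming three hypothetical modules that are all finished: they are wired together in one step and exercised with a user-like sequence of orders. No stubs or drivers are needed, but if the final check fails, the result alone does not say whether parsing, tax calculation, or totalling is at fault.

```python
# Hypothetical, fully developed modules, all integrated in a single step.
def parse_order(line: str) -> dict:
    """Parse a raw order line such as "widget,3,2.50"."""
    name, qty, price = line.split(",")
    return {"item": name.strip(), "qty": int(qty), "unit_price": float(price)}


def compute_tax(amount: float, rate: float = 0.2) -> float:
    """Assumed flat 20% tax rate, for illustration only."""
    return round(amount * rate, 2)


def place_order(line: str) -> float:
    """Top-level flow that calls every module: parse, subtotal, tax, total."""
    order = parse_order(line)
    subtotal = order["qty"] * order["unit_price"]
    return round(subtotal + compute_tax(subtotal), 2)


def run_usage_scenario() -> list:
    """User-like workload: orders as a customer might submit them."""
    orders = ["widget,3,2.50", "gadget,1,10.00"]
    return [place_order(line) for line in orders]


print(run_usage_scenario())  # [9.0, 12.0]
```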